Security

1 - Overview of Cloud Native Security

This overview defines a model for thinking about Kubernetes security in the context of Cloud Native security.

Warning: This container security model provides suggestions, not proven information security policies.

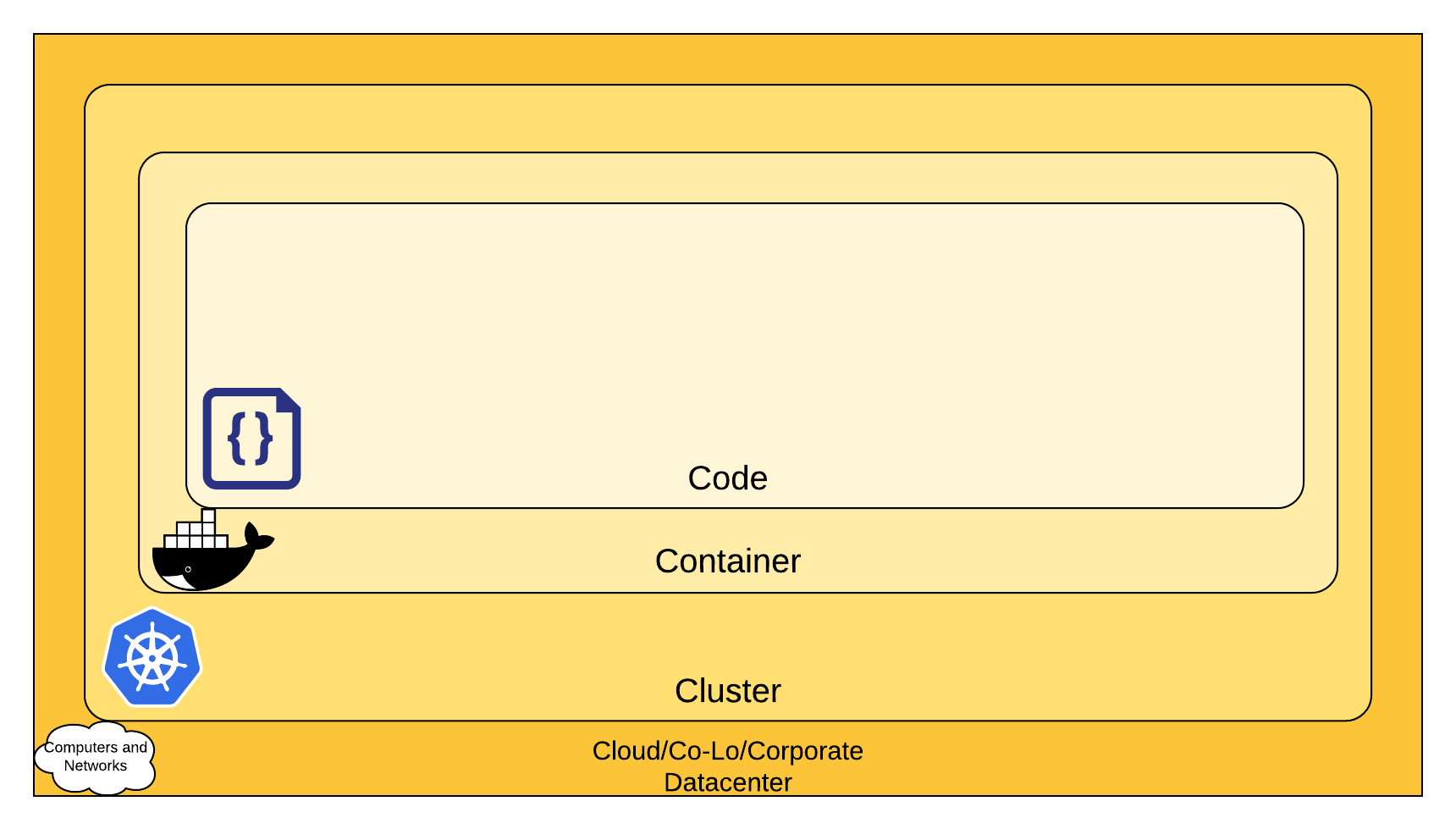

The 4C's of Cloud Native security

You can think about security in layers. The 4C's of Cloud Native security are Cloud, Clusters, Containers, and Code.

Note: This layered approach augments the defense in depth computing approach to security, which is widely regarded as a best practice for securing software systems.

Each layer of the Cloud Native security model builds upon the next outermost layer. The Code layer benefits from strong base (Cloud, Cluster, Container) security layers. You cannot safeguard against poor security standards in the base layers by addressing security at the Code level.

Cloud

In many ways, the Cloud (or co-located servers, or the corporate datacenter) is the trusted computing base of a Kubernetes cluster. If the Cloud layer is vulnerable (or configured in a vulnerable way) then there is no guarantee that the components built on top of this base are secure. Each cloud provider makes security recommendations for running workloads securely in their environment.

Cloud provider security

If you are running a Kubernetes cluster on your own hardware or a different cloud provider, consult your documentation for security best practices. Here are links to some of the popular cloud providers' security documentation:

| IaaS Provider | Link |

|---|---|

| Alibaba Cloud | https://www.alibabacloud.com/trust-center |

| Amazon Web Services | https://aws.amazon.com/security/ |

| Google Cloud Platform | https://cloud.google.com/security/ |

| IBM Cloud | https://www.ibm.com/cloud/security |

| Microsoft Azure | https://docs.microsoft.com/en-us/azure/security/azure-security |

| VMware vSphere | https://www.vmware.com/security/hardening-guides.html |

Infrastructure security

Suggestions for securing your infrastructure in a Kubernetes cluster:

| Area of Concern for Kubernetes Infrastructure | Recommendation |

|---|---|

| Network access to API Server (Control plane) | Access to the Kubernetes control plane should not be allowed publicly on the internet. Control it with network access control lists restricted to the set of IP addresses needed to administer the cluster. |

| Network access to Nodes (nodes) | Nodes should be configured to only accept connections (via network access control lists) from the control plane on the specified ports, and to accept connections for services in Kubernetes of type NodePort and LoadBalancer. If possible, these nodes should not be exposed on the public internet at all. |

| Kubernetes access to Cloud Provider API | Each cloud provider needs to grant a different set of permissions to the Kubernetes control plane and nodes. It is best to provide the cluster with cloud provider access that follows the principle of least privilege for the resources it needs to administer. The Kops documentation provides information about IAM policies and roles. |

| Access to etcd | Access to etcd (the datastore of Kubernetes) should be limited to the control plane only. Depending on your configuration, you should attempt to use etcd over TLS. More information can be found in the etcd documentation. |

| etcd Encryption | Wherever possible it's a good practice to encrypt all drives at rest, but since etcd holds the state of the entire cluster (including Secrets) its disk should especially be encrypted at rest. |
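The first row of the table above restricts control plane access to a set of administrative IP addresses. As a minimal sketch of that check (not how any cloud provider implements its access control lists), the following uses Python's standard `ipaddress` module. The CIDR ranges and the `is_allowed_source` name are assumptions chosen for illustration; the ranges are reserved documentation networks.

```python
import ipaddress

# Hypothetical allowlist of administrative networks (documentation-only ranges).
ADMIN_CIDRS = [
    ipaddress.ip_network("203.0.113.0/24"),
    ipaddress.ip_network("198.51.100.16/28"),
]


def is_allowed_source(source_ip: str, allowed=ADMIN_CIDRS) -> bool:
    """Return True if source_ip falls inside any allowed network."""
    addr = ipaddress.ip_address(source_ip)
    return any(addr in network for network in allowed)


if __name__ == "__main__":
    for ip in ("203.0.113.7", "192.0.2.1"):
        print(ip, "allowed" if is_allowed_source(ip) else "denied")
```

In practice this rule belongs in the network layer (security groups, firewall rules), not in application code; the sketch only shows the matching logic.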

Cluster

There are two areas of concern for securing Kubernetes:

- Securing the cluster components that are configurable

- Securing the applications which run in the cluster

Components of the Cluster

If you want to protect your cluster from accidental or malicious access and adopt good information practices, read and follow the advice about securing your cluster.

Components in the cluster (your application)

Depending on the attack surface of your application, you may want to focus on specific aspects of security. For example, suppose you are running a service (Service A) that is critical in a chain of other resources, and a separate workload (Service B) that is vulnerable to a resource exhaustion attack. If you do not limit the resources of Service B, the risk of compromising Service A is high. The following table lists areas of security concerns and recommendations for securing workloads running in Kubernetes:

| Area of Concern for Workload Security | Recommendation |

|---|---|

| RBAC Authorization (Access to the Kubernetes API) | https://kubernetes.io/docs/reference/access-authn-authz/rbac/ |

| Authentication | https://kubernetes.io/docs/concepts/security/controlling-access/ |

| Application secrets management (and encrypting them in etcd at rest) | https://kubernetes.io/docs/concepts/configuration/secret/ https://kubernetes.io/docs/tasks/administer-cluster/encrypt-data/ |

| Pod Security Policies | https://kubernetes.io/docs/concepts/policy/pod-security-policy/ |

| Quality of Service (and Cluster resource management) | https://kubernetes.io/docs/tasks/configure-pod-container/quality-service-pod/ |

| Network Policies | https://kubernetes.io/docs/concepts/services-networking/network-policies/ |

| TLS For Kubernetes Ingress | https://kubernetes.io/docs/concepts/services-networking/ingress/#tls |

Container

Container security is outside the scope of this guide. Here are general recommendations and links to explore this topic:

| Area of Concern for Containers | Recommendation |

|---|---|

| Container Vulnerability Scanning and OS Dependency Security | As part of an image build step, you should scan your containers for known vulnerabilities. |

| Image Signing and Enforcement | Sign container images to maintain a system of trust for the content of your containers. |

| Disallow privileged users | When constructing containers, consult your documentation for how to create users inside of the containers that have the least level of operating system privilege necessary in order to carry out the goal of the container. |

| Use container runtime with stronger isolation | Select container runtime classes that provide stronger isolation. |

Code

Application code is one of the primary attack surfaces over which you have the most control. While securing application code is outside of the Kubernetes security topic, here are recommendations to protect application code:

Code security

| Area of Concern for Code | Recommendation |

|---|---|

| Access over TLS only | If your code needs to communicate by TCP, perform a TLS handshake with the client ahead of time. With the exception of a few cases, encrypt everything in transit. Going one step further, it's a good idea to encrypt network traffic between services. This can be done through a process known as mutual TLS authentication (mTLS), which performs a two-sided verification of communication between two certificate-holding services. |

| Limiting port ranges of communication | This recommendation may be a bit self-explanatory, but wherever possible you should only expose the ports on your service that are absolutely essential for communication or metric gathering. |

| 3rd Party Dependency Security | It is a good practice to regularly scan your application's third-party libraries for known security vulnerabilities. Most programming language ecosystems have a tool for performing this check automatically. |

| Static Code Analysis | Most languages provide a way for a snippet of code to be analyzed for any potentially unsafe coding practices. Whenever possible you should perform checks using automated tooling that can scan codebases for common security errors. Some of the tools can be found at: https://owasp.org/www-community/Source_Code_Analysis_Tools |

| Dynamic probing attacks | There are a few automated tools that you can run against your service to try some of the well-known service attacks. These include SQL injection, CSRF, and XSS. One of the most popular dynamic analysis tools is the OWASP Zed Attack Proxy (ZAP). |
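The "Access over TLS only" row mentions mutual TLS between services. The following is a minimal sketch of the server side of that idea using Python's standard `ssl` module: the server context is configured to require and verify a client certificate. The function name and the three file-path parameters are hypothetical; without real certificate files the context is built but cannot serve traffic.

```python
import ssl


def make_mtls_server_context(cert_file=None, key_file=None, client_ca_file=None):
    """Build a server-side TLS context that demands a client certificate.

    All three paths are hypothetical placeholders supplied by the caller.
    """
    # Purpose.CLIENT_AUTH yields a context for the server end of a connection.
    ctx = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # The "mutual" part: the handshake fails unless the client presents a
    # certificate that chains to a CA this context trusts.
    ctx.verify_mode = ssl.CERT_REQUIRED
    if cert_file:
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    if client_ca_file:
        ctx.load_verify_locations(cafile=client_ca_file)
    return ctx
```

Many clusters delegate this to a service mesh or sidecar instead of configuring it in each application; the sketch only shows what "two-sided verification" means at the socket level.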

What's next

Learn about related Kubernetes security topics:

2 - Pod Security Standards

Security settings for Pods are typically applied by using security contexts. Security Contexts allow for the definition of privilege and access controls on a per-Pod basis.

The enforcement and policy-based definition of cluster requirements of security contexts has previously been achieved using Pod Security Policy. A Pod Security Policy is a cluster-level resource that controls security sensitive aspects of the Pod specification.

However, numerous means of policy enforcement have arisen that augment or replace the use of PodSecurityPolicy. The intent of this page is to detail recommended Pod security profiles, decoupled from any specific instantiation.

Policy Types

There is an immediate need for base policy definitions to broadly cover the security spectrum. These should range from highly restricted to highly flexible:

- Privileged - Unrestricted policy, providing the widest possible level of permissions. This policy allows for known privilege escalations.

- Baseline - Minimally restrictive policy while preventing known privilege escalations. Allows the default (minimally specified) Pod configuration.

- Restricted - Heavily restricted policy, following current Pod hardening best practices.

Policies

Privileged

The Privileged policy is purposely open and entirely unrestricted. This type of policy is typically aimed at system- and infrastructure-level workloads managed by privileged, trusted users.

The privileged policy is defined by an absence of restrictions. For allow-by-default enforcement mechanisms (such as Gatekeeper), the privileged profile may be an absence of applied constraints rather than an instantiated policy. In contrast, for a deny-by-default mechanism (such as Pod Security Policy) the privileged policy should enable all controls (disable all restrictions).

Baseline

The Baseline policy is aimed at ease of adoption for common containerized workloads while preventing known privilege escalations. This policy is targeted at application operators and developers of non-critical applications. The following listed controls should be enforced/disallowed:

| Control | Policy |

|---|---|

| Host Namespaces | Sharing the host namespaces must be disallowed. Restricted Fields: spec.hostNetwork spec.hostPID spec.hostIPC Allowed Values: false |

| Privileged Containers | Privileged Pods disable most security mechanisms and must be disallowed. Restricted Fields: spec.containers[*].securityContext.privileged spec.initContainers[*].securityContext.privileged Allowed Values: false, undefined/nil |

| Capabilities | Adding NET_RAW or capabilities beyond the default set must be disallowed. Restricted Fields: spec.containers[*].securityContext.capabilities.add spec.initContainers[*].securityContext.capabilities.add Allowed Values: empty (or restricted to a known list) |

| HostPath Volumes | HostPath volumes must be forbidden. Restricted Fields: spec.volumes[*].hostPath Allowed Values: undefined/nil |

| Host Ports | HostPorts should be disallowed, or at minimum restricted to a known list. Restricted Fields: spec.containers[*].ports[*].hostPort spec.initContainers[*].ports[*].hostPort Allowed Values: 0, undefined (or restricted to a known list) |

| AppArmor | On supported hosts, the 'runtime/default' AppArmor profile is applied by default. The baseline policy should prevent overriding or disabling the default AppArmor profile, or restrict overrides to an allowed set of profiles. Restricted Fields: metadata.annotations['container.apparmor.security.beta.kubernetes.io/*'] Allowed Values: 'runtime/default', undefined |

| SELinux | Setting the SELinux type is restricted, and setting a custom SELinux user or role option is forbidden. Restricted Fields: spec.securityContext.seLinuxOptions.type spec.containers[*].securityContext.seLinuxOptions.type spec.initContainers[*].securityContext.seLinuxOptions.type Allowed Values: undefined/empty container_t container_init_t container_kvm_t Restricted Fields: spec.securityContext.seLinuxOptions.user spec.containers[*].securityContext.seLinuxOptions.user spec.initContainers[*].securityContext.seLinuxOptions.user spec.securityContext.seLinuxOptions.role spec.containers[*].securityContext.seLinuxOptions.role spec.initContainers[*].securityContext.seLinuxOptions.role Allowed Values: undefined/empty |

| /proc Mount Type | The default /proc masks are set up to reduce attack surface, and should be required. Restricted Fields: spec.containers[*].securityContext.procMount spec.initContainers[*].securityContext.procMount Allowed Values: undefined/nil, 'Default' |

| Sysctls | Sysctls can disable security mechanisms or affect all containers on a host, and should be disallowed except for an allowed "safe" subset. A sysctl is considered safe if it is namespaced in the container or the Pod, and it is isolated from other Pods or processes on the same Node. Restricted Fields: spec.securityContext.sysctls Allowed Values: kernel.shm_rmid_forced net.ipv4.ip_local_port_range net.ipv4.tcp_syncookies net.ipv4.ping_group_range undefined/empty |
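To make a few of the Baseline controls concrete, here is a rough sketch of a checker that inspects a Pod manifest (as a Python dict) for host namespaces, privileged containers, added capabilities, HostPath volumes, and host ports. It is not an enforcement mechanism and does not cover AppArmor, SELinux, `/proc` mounts, or sysctls. The function name `baseline_violations` is made up for this example, and the capabilities check takes the strictest reading of the table (any added capability is reported).

```python
def baseline_violations(pod: dict) -> list:
    """Return human-readable violations for a subset of Baseline controls."""
    spec = pod.get("spec", {})
    problems = []

    # Host Namespaces: hostNetwork, hostPID and hostIPC must be false or unset.
    for field in ("hostNetwork", "hostPID", "hostIPC"):
        if spec.get(field):
            problems.append(f"spec.{field} must be false")

    # HostPath Volumes: spec.volumes[*].hostPath must be undefined.
    for volume in spec.get("volumes", []):
        if volume.get("hostPath") is not None:
            problems.append(f"volume {volume.get('name')!r} uses hostPath")

    for kind in ("containers", "initContainers"):
        for container in spec.get(kind, []):
            name = container.get("name", "<unnamed>")
            sc = container.get("securityContext") or {}
            # Privileged Containers: must be false or unset.
            if sc.get("privileged"):
                problems.append(f"{kind}/{name}: privileged must be false")
            # Capabilities: strictest reading, nothing may be added.
            if (sc.get("capabilities") or {}).get("add"):
                problems.append(f"{kind}/{name}: capabilities.add must be empty")
            # Host Ports: hostPort must be 0 or unset.
            for port in container.get("ports", []):
                if port.get("hostPort"):
                    problems.append(f"{kind}/{name}: hostPort must be 0 or unset")
    return problems
```

A Pod that sets none of these fields produces an empty list, which matches the table's statement that the default, minimally specified Pod configuration is allowed.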

Restricted

The Restricted policy is aimed at enforcing current Pod hardening best practices, at the expense of some compatibility. It is targeted at operators and developers of security-critical applications, as well as lower-trust users. The following listed controls should be enforced/disallowed:

| Control | Policy |

|---|---|

| Everything from the baseline profile. | |

| Volume Types | In addition to restricting HostPath volumes, the restricted profile limits usage of non-ephemeral volume types to those defined through PersistentVolumes. Restricted Fields: spec.volumes[*].hostPath spec.volumes[*].gcePersistentDisk spec.volumes[*].awsElasticBlockStore spec.volumes[*].gitRepo spec.volumes[*].nfs spec.volumes[*].iscsi spec.volumes[*].glusterfs spec.volumes[*].rbd spec.volumes[*].flexVolume spec.volumes[*].cinder spec.volumes[*].cephFS spec.volumes[*].flocker spec.volumes[*].fc spec.volumes[*].azureFile spec.volumes[*].vsphereVolume spec.volumes[*].quobyte spec.volumes[*].azureDisk spec.volumes[*].portworxVolume spec.volumes[*].scaleIO spec.volumes[*].storageos Allowed Values: undefined/nil |

| Privilege Escalation | Privilege escalation (such as via set-user-ID or set-group-ID file mode) should not be allowed. Restricted Fields: spec.containers[*].securityContext.allowPrivilegeEscalation spec.initContainers[*].securityContext.allowPrivilegeEscalation Allowed Values: false |

| Running as Non-root | Containers must be required to run as non-root users. Restricted Fields: spec.securityContext.runAsNonRoot spec.containers[*].securityContext.runAsNonRoot spec.initContainers[*].securityContext.runAsNonRoot Allowed Values: true |

| Non-root groups (optional) | Containers should be forbidden from running with a root primary or supplementary GID. Restricted Fields: spec.securityContext.runAsGroup spec.securityContext.supplementalGroups[*] spec.securityContext.fsGroup spec.containers[*].securityContext.runAsGroup spec.initContainers[*].securityContext.runAsGroup Allowed Values: non-zero undefined / nil (except for `*.runAsGroup`) |

| Seccomp | The RuntimeDefault seccomp profile must be required, or allow specific additional profiles. Restricted Fields: spec.securityContext.seccompProfile.type spec.containers[*].securityContext.seccompProfile spec.initContainers[*].securityContext.seccompProfile Allowed Values: 'runtime/default' undefined / nil |
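Two of the Restricted controls, "Privilege Escalation" and "Running as Non-root", can be sketched as a small check over a Pod manifest dict. This is an illustrative sketch only: the function name `restricted_violations` is hypothetical, the other Restricted controls are not covered, and it assumes that a container-level `runAsNonRoot` takes precedence over the Pod-level value when both are set.

```python
def restricted_violations(pod: dict) -> list:
    """Check allowPrivilegeEscalation and runAsNonRoot for every container."""
    spec = pod.get("spec", {})
    pod_sc = spec.get("securityContext") or {}
    problems = []

    for kind in ("containers", "initContainers"):
        for container in spec.get(kind, []):
            name = container.get("name", "<unnamed>")
            sc = container.get("securityContext") or {}

            # Allowed value is exactly false; unset does not satisfy the table.
            if sc.get("allowPrivilegeEscalation") is not False:
                problems.append(
                    f"{kind}/{name}: allowPrivilegeEscalation must be false"
                )

            # Assumption: the container-level value wins over the Pod-level one.
            run_as_non_root = sc.get("runAsNonRoot", pod_sc.get("runAsNonRoot"))
            if run_as_non_root is not True:
                problems.append(f"{kind}/{name}: runAsNonRoot must be true")
    return problems
```

Because the Restricted profile includes everything from the Baseline profile, a real check would run the Baseline controls as well.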

Policy Instantiation

Decoupling policy definition from policy instantiation allows for a common understanding and consistent language of policies across clusters, independent of the underlying enforcement mechanism.

As mechanisms mature, they will be defined below on a per-policy basis. The methods of enforcement of individual policies are not defined here.

FAQ

Why isn't there a profile between privileged and baseline?

The three profiles defined here have a clear linear progression from most secure (restricted) to least secure (privileged), and cover a broad set of workloads. Privileges required above the baseline policy are typically very application specific, so we do not offer a standard profile in this niche. This is not to say that the privileged profile should always be used in this case, but that policies in this space need to be defined on a case-by-case basis.

SIG Auth may reconsider this position in the future, should a clear need for other profiles arise.

What's the difference between a security policy and a security context?

Security Contexts configure Pods and Containers at runtime. Security contexts are defined as part of the Pod and container specifications in the Pod manifest, and represent parameters to the container runtime.

Security policies are control plane mechanisms to enforce specific settings in the Security Context, as well as other parameters outside the Security Context. As of February 2020, the current native solution for enforcing these security policies is Pod Security Policy - a mechanism for centrally enforcing security policy on Pods across a cluster. Other alternatives for enforcing security policy are being developed in the Kubernetes ecosystem, such as OPA Gatekeeper.

What profiles should I apply to my Windows Pods?

Windows in Kubernetes has some limitations and differentiators from standard Linux-based workloads. Specifically, the Pod SecurityContext fields have no effect on Windows. As such, no standardized Pod Security profiles currently exist.

What about sandboxed Pods?

There is not currently an API standard that controls whether a Pod is considered sandboxed or not. Sandboxed Pods may be identified by the use of a sandboxed runtime (such as gVisor or Kata Containers), but there is no standard definition of what a sandboxed runtime is.

The protections necessary for sandboxed workloads can differ from others. For example, the need to restrict privileged permissions is lessened when the workload is isolated from the underlying kernel. This allows for workloads requiring heightened permissions to still be isolated.

Additionally, the protection of sandboxed workloads is highly dependent on the method of sandboxing. As such, no single policy is recommended for all sandboxed workloads.

3 - Controlling Access to the Kubernetes API

This page provides an overview of controlling access to the Kubernetes API.

Users access the Kubernetes API using kubectl, client libraries, or by making REST requests. Both human users and Kubernetes service accounts can be authorized for API access.

When a request reaches the API, it goes through several stages, illustrated in the following diagram:

Transport security

In a typical Kubernetes cluster, the API serves on port 443, protected by TLS. The API server presents a certificate. This certificate may be signed using a private certificate authority (CA), or based on a public key infrastructure linked to a generally recognized CA.

If your cluster uses a private certificate authority, you need a copy of that CA certificate configured into your ~/.kube/config on the client, so that you can trust the connection and be confident it was not intercepted.

Your client can present a TLS client certificate at this stage.
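As a rough illustration of the client side of this step, the sketch below builds a TLS context with Python's standard `ssl` module that trusts a private CA and optionally presents a client certificate. It does not read `~/.kube/config`; the function name and the three path parameters are hypothetical and would have to be supplied by the caller.

```python
import ssl


def make_api_client_context(ca_file=None, client_cert=None, client_key=None):
    """Build a client TLS context for talking to an API server.

    ca_file: path to the private CA certificate (hypothetical placeholder).
             When omitted, the system trust store is used instead.
    client_cert / client_key: optional client certificate and key paths.
    """
    # Purpose.SERVER_AUTH verifies the server's certificate and hostname.
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH, cafile=ca_file)
    if client_cert:
        # Presenting a client certificate is what enables certificate-based
        # authentication in the next stage.
        ctx.load_cert_chain(certfile=client_cert, keyfile=client_key)
    return ctx
```

The important property is that verification stays on: the context rejects a server whose certificate does not chain to a trusted CA, which is what lets the client be confident the connection was not intercepted.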

Authentication

Once TLS is established, the HTTP request moves to the Authentication step. This is shown as step 1 in the diagram. The cluster creation script or cluster admin configures the API server to run one or more Authenticator modules. Authenticators are described in more detail in Authentication.

The input to the authentication step is the entire HTTP request; however, it typically examines the headers and/or client certificate.

Authentication modules include client certificates, passwords, plain tokens, bootstrap tokens, and JSON Web Tokens (used for service accounts).

Multiple authentication modules can be specified, in which case each one is tried in sequence, until one of them succeeds.

If the request cannot be authenticated, it is rejected with HTTP status code 401.

Otherwise, the user is authenticated as a specific username, and the username is available to subsequent steps to use in their decisions. Some authenticators also provide the group memberships of the user, while other authenticators do not.

While Kubernetes uses usernames for access control decisions and in request logging, it does not have a User object nor does it store usernames or other information about users in its API.
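The "try each module in sequence until one succeeds, otherwise 401" behavior described above can be sketched as follows. This is a toy model, not the API server's code: requests are reduced to a dict of headers, and both authenticators (including the `X-Verified-Client-CN` header standing in for a verified client certificate) are hypothetical.

```python
from typing import Callable, Dict, List, Optional, Tuple

# An authenticator inspects the request and returns a username, or None
# if it cannot authenticate the request.
Authenticator = Callable[[Dict[str, str]], Optional[str]]


def static_token_authenticator(tokens: Dict[str, str]) -> Authenticator:
    """Build an authenticator that maps bearer tokens to usernames."""
    def authenticate(headers: Dict[str, str]) -> Optional[str]:
        value = headers.get("Authorization", "")
        if value.startswith("Bearer "):
            return tokens.get(value[len("Bearer "):])
        return None
    return authenticate


def client_cert_authenticator(headers: Dict[str, str]) -> Optional[str]:
    """Hypothetical stand-in: treat a header as the verified certificate CN."""
    return headers.get("X-Verified-Client-CN")


def authenticate(
    headers: Dict[str, str], authenticators: List[Authenticator]
) -> Tuple[int, Optional[str]]:
    """Try each authenticator in order; the first success wins."""
    for authenticator in authenticators:
        username = authenticator(headers)
        if username is not None:
            return 200, username
    # No module recognized the request.
    return 401, None
```

Note that only the username flows on to later stages here; a fuller model would also carry group memberships for authenticators that provide them.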

Authorization

After the request is authenticated as coming from a specific user, the request must be authorized. This is shown as step 2 in the diagram.

A request must include the username of the requester, the requested action, and the object affected by the action. The request is authorized if an existing policy declares that the user has permissions to complete the requested action.

For example, if Bob has the policy below, then he can read pods only in the namespace projectCaribou:

    {
      "apiVersion": "abac.authorization.kubernetes.io/v1beta1",
      "kind": "Policy",
      "spec": {
        "user": "bob",
        "namespace": "projectCaribou",
        "resource": "pods",
        "readonly": true
      }
    }

If Bob makes the following request, the request is authorized because he is allowed to read objects in the projectCaribou namespace:

    {
      "apiVersion": "authorization.k8s.io/v1beta1",
      "kind": "SubjectAccessReview",
      "spec": {
        "resourceAttributes": {
          "namespace": "projectCaribou",
          "verb": "get",
          "group": "unicorn.example.org",
          "resource": "pods"
        }
      }
    }

If Bob makes a request to write (create or update) to the objects in the projectCaribou namespace, his authorization is denied. If Bob makes a request to read (get) objects in a different namespace such as projectFish, then his authorization is denied.
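The outcomes described for Bob can be reproduced with a small sketch that matches request attributes against the `spec` of the policy above. This is a simplification for illustration, not the ABAC authorizer itself: it ignores wildcards and API groups, and the function name `abac_allows` is hypothetical. It treats `readonly` as limiting the policy to the `get`, `list`, and `watch` verbs.

```python
READ_VERBS = {"get", "list", "watch"}

# The "spec" of Bob's policy from the example above.
BOB_POLICY = {
    "user": "bob",
    "namespace": "projectCaribou",
    "resource": "pods",
    "readonly": True,
}


def abac_allows(policy_spec: dict, user: str, attrs: dict) -> bool:
    """Return True if the policy grants `user` the requested action."""
    if policy_spec.get("user") != user:
        return False
    if policy_spec.get("namespace") != attrs.get("namespace"):
        return False
    if policy_spec.get("resource") != attrs.get("resource"):
        return False
    # A read-only policy never authorizes create, update, or delete.
    if policy_spec.get("readonly") and attrs.get("verb") not in READ_VERBS:
        return False
    return True


if __name__ == "__main__":
    read = {"namespace": "projectCaribou", "verb": "get", "resource": "pods"}
    write = dict(read, verb="create")
    elsewhere = dict(read, namespace="projectFish")
    for attrs in (read, write, elsewhere):
        print(attrs["verb"], attrs["namespace"], abac_allows(BOB_POLICY, "bob", attrs))
```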

Kubernetes authorization requires that you use common REST attributes to interact with existing organization-wide or cloud-provider-wide access control systems. It is important to use REST formatting because these control systems might interact with other APIs besides the Kubernetes API.

Kubernetes supports multiple authorization modules, such as ABAC mode, RBAC Mode, and Webhook mode. When an administrator creates a cluster, they configure the authorization modules that should be used in the API server. If more than one authorization module is configured, Kubernetes checks each module, and if any module authorizes the request, then the request can proceed. If all of the modules deny the request, then the request is denied (HTTP status code 403).
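The combination rule in the paragraph above (any module may authorize; if none does, the result is 403) can be modeled in a few lines. The two example modules and all names here are hypothetical, and each module is simplified to a yes/no answer, which is an assumption of this sketch and may not reflect everything a real module can return.

```python
from typing import Callable, Iterable

# A module answers whether it authorizes (user, request attributes).
Authorizer = Callable[[str, dict], bool]


def allow_cluster_admins(user: str, attrs: dict) -> bool:
    """Hypothetical module: a fixed set of administrators may do anything."""
    return user in {"admin"}


def allow_reads_in_own_namespace(user: str, attrs: dict) -> bool:
    """Hypothetical module: users may read objects in a namespace named after them."""
    return (
        attrs.get("verb") in {"get", "list", "watch"}
        and attrs.get("namespace") == user
    )


def authorize(user: str, attrs: dict, authorizers: Iterable[Authorizer]) -> int:
    """Return 200 if any module authorizes the request, otherwise 403."""
    if any(module(user, attrs) for module in authorizers):
        return 200
    return 403
```

Because `any` stops at the first module that says yes, later modules are not consulted once the request has been authorized.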

To learn more about Kubernetes authorization, including details about creating policies using the supported authorization modules, see Authorization.

Admission control

Admission Control modules are software modules that can modify or reject requests. In addition to the attributes available to Authorization modules, Admission Control modules can access the contents of the object that is being created or modified.

Admission controllers act on requests that create, modify, delete, or connect to (proxy) an object. Admission controllers do not act on requests that merely read objects. When multiple admission controllers are configured, they are called in order.

This is shown as step 3 in the diagram.

Unlike Authentication and Authorization modules, if any admission controller module rejects, then the request is immediately rejected.

In addition to rejecting objects, admission controllers can also set complex defaults for fields.

The available Admission Control modules are described in Admission Controllers.

Once a request passes all admission controllers, it is validated using the validation routines for the corresponding API object, and then written to the object store (shown as step 4).
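The admission behavior described in this section (skipped for reads, controllers called in order, defaults may be set, first rejection ends the request) can be sketched as a simple chain. This is a toy model under stated assumptions: the two controllers, the `Rejected` exception, and the `admit` function are invented for illustration and are not the built-in admission controllers.

```python
import copy

READ_VERBS = {"get", "list", "watch"}


class Rejected(Exception):
    """Raised by an admission controller to reject the request."""


def default_image_pull_policy(verb: str, obj: dict) -> dict:
    """Hypothetical mutating controller: fill in a default field value."""
    for container in obj.get("spec", {}).get("containers", []):
        container.setdefault("imagePullPolicy", "IfNotPresent")
    return obj


def deny_privileged(verb: str, obj: dict) -> dict:
    """Hypothetical validating controller: inspect the object's contents."""
    for container in obj.get("spec", {}).get("containers", []):
        if (container.get("securityContext") or {}).get("privileged"):
            raise Rejected("privileged containers are not allowed")
    return obj


def admit(verb: str, obj: dict, controllers) -> dict:
    """Run the controllers in order; any Rejected aborts immediately."""
    if verb in READ_VERBS:
        # Admission controllers do not act on requests that only read objects.
        return obj
    obj = copy.deepcopy(obj)  # do not mutate the caller's object
    for controller in controllers:
        obj = controller(verb, obj)
    return obj
```

Only an object returned by `admit` without an exception would go on to validation and be written to the object store.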

API server ports and IPs

The previous discussion applies to requests sent to the secure port of the API server (the typical case). By default, the Kubernetes API server serves HTTP on 2 ports:

localhost port:

- is intended for testing and bootstrap, and for other components of the master node (scheduler, controller-manager) to talk to the API

- no TLS

- default is port 8080, change with `--insecure-port` flag.

- default IP is localhost, change with `--insecure-bind-address` flag.

- request bypasses authentication and authorization modules.

- request handled by admission control module(s).

- protected by need to have host access

“Secure port”:

- use whenever possible

- uses TLS. Set cert with `--tls-cert-file` and key with `--tls-private-key-file` flag.

- default is port 6443, change with `--secure-port` flag.

- default IP is first non-localhost network interface, change with `--bind-address` flag.

- request handled by authentication and authorization modules.

- request handled by admission control module(s).

What's next

Read more documentation on authentication, authorization and API access control:

- Authenticating

- Admission Controllers

- Authorization

- Certificate Signing Requests

- including CSR approval and certificate signing

- Service accounts

You can learn about:

- how Pods can use Secrets to obtain API credentials.